Server-side rendering

Panaudia uses server-side rendering to bring large numbers of people together in virtual spaces and give them all reliable, many-to-many spatialised audio.

There are several quite different technical approaches to working with spatial audio across networks. They break down into three broad categories:

- Broadcasting spatial audio

- Client-side rendering

- Server-side rendering, which is what we do.

Broadcasting spatial audio

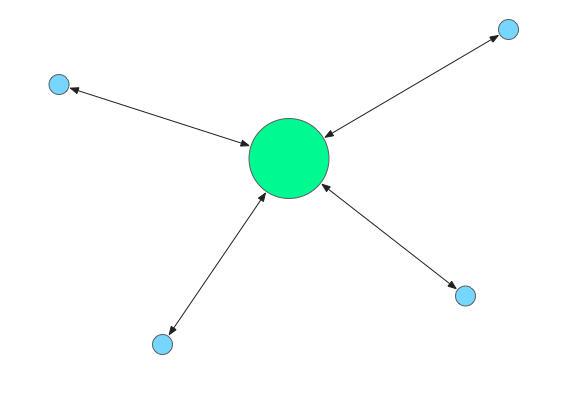

A single spatialised audio mix is created on the server and delivered to clients as a multichannel or ambisonic stream, which is then rendered on each client.

It is relatively easy to generate an ambisonic mix of something like a concert and distribute it to very large numbers of clients, which can then rotate and render the sound field locally.
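To make "rotate the sound field locally" concrete, here is a minimal sketch for first-order ambisonics. It assumes B-format channels W (omni), X (front), Y (left) and Z (up) with the traditional FuMa weighting of W; the names `BFormat`, `encode_source`, `rotate_yaw` and `compensate_head_turn` are hypothetical. A yaw rotation only mixes X and Y, which is why a client can follow head movement cheaply. Decoding the rotated field to headphones or speakers, which a real renderer does next, is not shown.

```python
import math
from dataclasses import dataclass


@dataclass
class BFormat:
    """One sample of first-order ambisonics: W (omni), X (front), Y (left), Z (up)."""
    w: float
    x: float
    y: float
    z: float


def encode_source(sample: float, azimuth_rad: float) -> BFormat:
    """Encode a mono sample arriving from azimuth_rad (0 = front, +pi/2 = left) at zero elevation.

    Assumes the traditional FuMa weighting, where W is scaled by 1/sqrt(2).
    """
    return BFormat(
        w=sample / math.sqrt(2.0),
        x=sample * math.cos(azimuth_rad),
        y=sample * math.sin(azimuth_rad),
        z=0.0,
    )


def rotate_yaw(field: BFormat, angle_rad: float) -> BFormat:
    """Rotate the whole sound field counter-clockwise (seen from above) by angle_rad.

    A yaw rotation leaves W and Z untouched; X and Y rotate like a 2D vector.
    """
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return BFormat(w=field.w, x=c * field.x - s * field.y, y=s * field.x + c * field.y, z=field.z)


def compensate_head_turn(field: BFormat, head_yaw_rad: float) -> BFormat:
    # When the listener turns left by head_yaw_rad, the scene must turn the
    # opposite way relative to them so sources stay fixed in the virtual space.
    return rotate_yaw(field, -head_yaw_rad)


front = encode_source(1.0, azimuth_rad=0.0)
turned = compensate_head_turn(front, head_yaw_rad=math.pi / 2)
print(round(turned.x, 6), round(turned.y, 6))  # 0.0 -1.0: the source is now to the listener's right
```

Every client receives the same four channels; only the rotation angle differs per listener, which is why this pattern scales so well for one-way distribution.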

Scaling to very large audiences is straightforward with CDNs, and there are several mature, well-supported rendering technologies, such as Dolby Atmos or Apple's Spatial Audio for AirPods, that can easily be integrated into clients to support this pattern.

The problem with broadcasting is that it's only broadcasting. It's a one-way distribution of content: it neither takes in audio streams from clients to include in the mix, nor delivers an individualised mix to each client based on their position in a virtual audio space. If that's what you want, ambisonic broadcasting is a great solution, but if you need many-to-many spatial audio it's unsuitable.

Client-side rendering

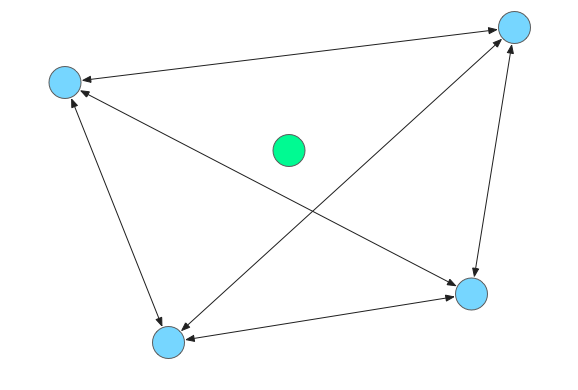

In contrast, client-side rendering supports two-way, many-to-many spatialised audio that can be used for things like virtual chat rooms. You send everyone's audio to everyone and use each client's local processing power to do the mixing and rendering, so the central server only has to handle signalling.
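The per-client mixing described above can be sketched as a loop that runs once per audio block: for every remote peer, derive a gain and a stereo pan from that peer's position relative to the listener, then add its samples into a local stereo mix. This is an illustrative sketch, not the API of three.js or any other library; `PeerFrame` and `spatialise_and_mix` are hypothetical names, and inverse-distance gain with equal-power panning is a deliberately simple stand-in for the HRTF-based rendering real libraries typically use.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PeerFrame:
    """One block of mono samples received from a remote peer, plus that peer's (x, y) position."""
    samples: List[float]
    position: Tuple[float, float]


def spatialise_and_mix(listener_pos: Tuple[float, float], listener_yaw_rad: float,
                       peers: List[PeerFrame], frame_len: int) -> Tuple[List[float], List[float]]:
    """Mix every remote peer into one stereo block, locally on the client.

    Simplified stand-in for real spatial rendering: inverse-distance gain plus
    equal-power stereo panning by azimuth (no HRTF, so front and back sound the same).
    Coordinates: x points forward at yaw 0, y points to the left.
    """
    left = [0.0] * frame_len
    right = [0.0] * frame_len
    for peer in peers:
        dx = peer.position[0] - listener_pos[0]
        dy = peer.position[1] - listener_pos[1]
        gain = 1.0 / max(math.hypot(dx, dy), 1.0)  # clamp so very close peers don't blow up
        azimuth = math.atan2(dy, dx) - listener_yaw_rad  # positive = to the listener's left
        # sin(azimuth) runs from +1 (hard left) to -1 (hard right); map it to a pan angle in [0, pi/2].
        pan = (1.0 - math.sin(azimuth)) * math.pi / 4
        gain_l, gain_r = gain * math.cos(pan), gain * math.sin(pan)
        for i in range(frame_len):
            left[i] += gain_l * peer.samples[i]
            right[i] += gain_r * peer.samples[i]
    return left, right
```

With N people in the room, each client runs the inner loop for N - 1 peers every block, so the work on every device grows with the size of the group.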

There are quite a few libraries, both native and browser-based, for client-side spatialised audio mixing and rendering. For example, three.js directly supports positional audio, and PeerJS makes peer-to-peer WebRTC simple.

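This works very well for small numbers of people, as the audio can be sent peer-to-peer and the computation is distributed among them. But as the numbers grow, so does the load on each node's CPU and network: every client must receive, decode and spatialise a stream from every other participant, and in a full mesh also send its own stream to each of them.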

Because client devices vary widely, it does not take many more participants before the network and CPU capacity of the less capable ones can no longer keep up, and things start to break.
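A little arithmetic shows why the load per device climbs so quickly. This sketch assumes a full peer-to-peer mesh with no forwarding server (with a selective forwarding unit, upload would drop to a single stream, but download would still be N - 1 streams) and an assumed 32 kbit/s per audio stream, chosen only for illustration. The function names are hypothetical.

```python
def mesh_load_per_client(participants: int, stream_kbps: float) -> dict:
    """Per-client load in a full peer-to-peer mesh, where every client sends to every other client."""
    peers = participants - 1
    return {
        "upload_streams": peers,             # own voice sent separately to each peer
        "download_streams": peers,           # one incoming stream per peer to decode and spatialise
        "upload_kbps": peers * stream_kbps,
        "download_kbps": peers * stream_kbps,
    }


def mesh_total_streams(participants: int) -> int:
    """Streams in flight across the whole mesh: one per ordered pair of distinct participants."""
    return participants * (participants - 1)


for n in (4, 20, 100):
    load = mesh_load_per_client(n, stream_kbps=32)
    print(n, load["download_streams"], load["download_kbps"], mesh_total_streams(n))
# 4 3 96 12
# 20 19 608 380
# 100 99 3168 9900
```

Each client's streams and bandwidth grow linearly with the group, and the total number of streams grows with its square, on devices whose capacity you don't control.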

Server-side rendering

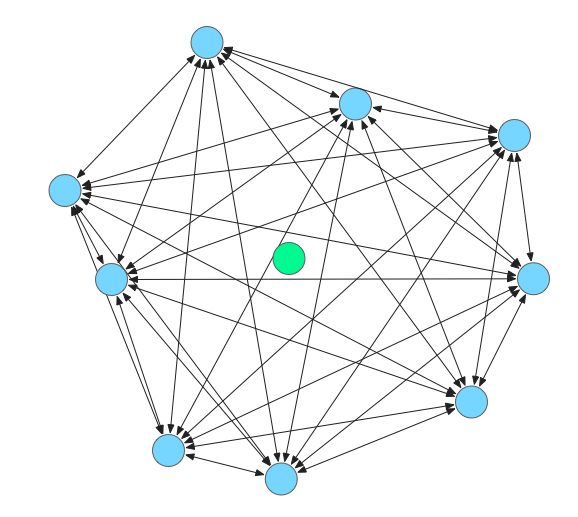

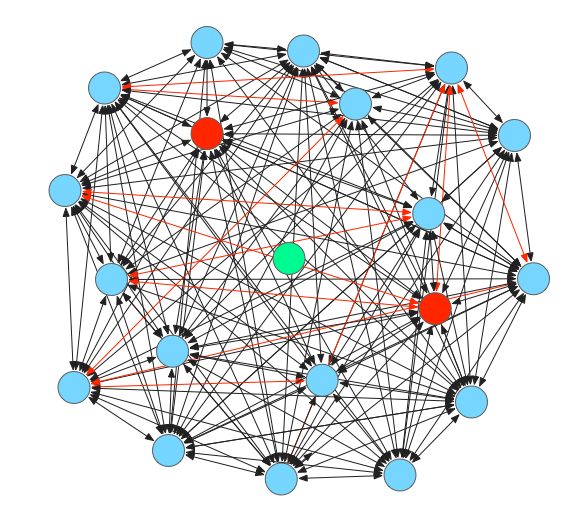

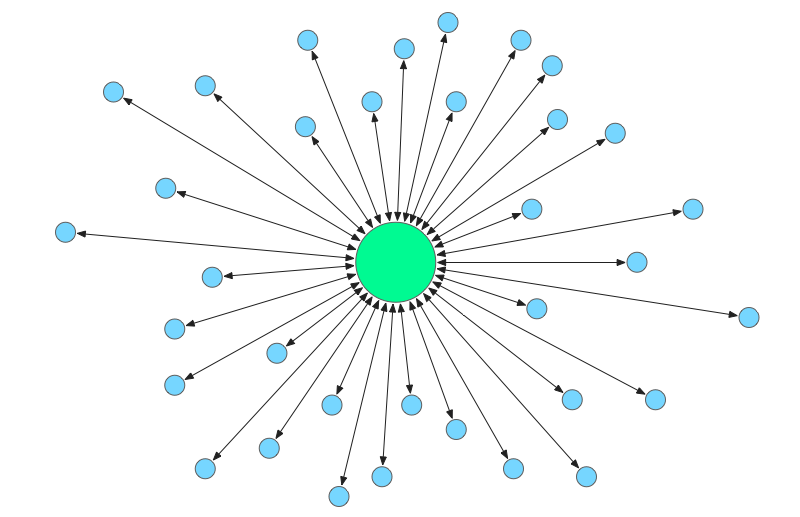

Server-side rendering also supports rich many-to-many spatial audio, but compared with client-side rendering, very little happens at the client end: each client sends a single stream to the server and receives a single stream back. All the hard work of mixing and rendering the spatialised audio is done centrally. The downside of this approach is that it uses more CPU in the cloud, as the audio processing moves from the edge to the centre.

The upside is that the clients are very lightweight in terms of complexity, network and CPU usage, which makes integration easier and connections more robust. More importantly, you can mix many, many more audio sources together than is possible with client-side rendering.
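The central work described above can be pictured as a loop over listeners: for each connected client, the server renders every other client's stream relative to that listener's position and orientation, then sends back one personalised mix. This is a naive illustration with hypothetical names (`render_all`, `pan_gains`), using inverse-distance gain and equal-power stereo panning as a toy stand-in for real spatial rendering; it makes no claim about how Panaudia's renderer actually works.

```python
import math
from typing import Dict, List, Tuple

Position = Tuple[float, float]  # (x, y); x points forward at yaw 0, y points to the left


def pan_gains(listener: Position, yaw: float, source: Position) -> Tuple[float, float]:
    """Inverse-distance gain with equal-power stereo panning (a simplified stand-in for HRTF rendering)."""
    dx, dy = source[0] - listener[0], source[1] - listener[1]
    gain = 1.0 / max(math.hypot(dx, dy), 1.0)
    pan = (1.0 - math.sin(math.atan2(dy, dx) - yaw)) * math.pi / 4
    return gain * math.cos(pan), gain * math.sin(pan)


def render_all(inputs: Dict[str, List[float]], positions: Dict[str, Position],
               yaws: Dict[str, float]) -> Dict[str, Tuple[List[float], List[float]]]:
    """Build one personalised stereo mix per client, entirely on the server.

    Each client uploads one mono block (inputs[client]) and gets exactly one stereo
    block back, so its load stays constant; the server's work is
    len(inputs) * (len(inputs) - 1) source renders per block.
    """
    frame_len = len(next(iter(inputs.values())))
    outputs = {}
    for listener in inputs:
        left, right = [0.0] * frame_len, [0.0] * frame_len
        for speaker, samples in inputs.items():
            if speaker == listener:
                continue  # don't send a client's own voice back to them
            gain_l, gain_r = pan_gains(positions[listener], yaws[listener], positions[speaker])
            for i, sample in enumerate(samples):
                left[i] += gain_l * sample
                right[i] += gain_r * sample
        outputs[listener] = (left, right)
    return outputs
```

Written this naively, the server's work grows with the square of the number of participants, which is the extra cloud CPU the trade-off refers to; a production renderer would likely use cheaper strategies. What the sketch does show is the client side of the bargain: one stream up and one stream down, however many people are in the space.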

Panaudia may not be the best choice for spatialised audio for very small groups of people, or if you want to broadcast the same ambisonic mix to many thousands of listeners. But if you want large groups of people to come together in a virtual space, Panaudia is a good choice.